오답노트 (Wrong Answer Notes)

[Keras] Operation Layers

# Import libraries
import tensorflow as tf
from tensorflow import keras
import numpy as np
import pandas as pd

# Load the data
from sklearn.datasets import load_iris

iris = load_iris()
x, y = iris.data, iris.target  # features and labels used by the split step

# Split the data
from sklearn.model_selection import train_test_split

x_train, x_val, y_train, y_val = train_test_split(x, y, test_size=0.3, random_state=2022)

x_train = pd.DataFrame(x_train, columns=iris.feature_names)
x_val = pd.DataFrame(x_val, columns=iris.feature_names)

# Separate sepal and petal features so each pair can feed its own input layer
x_train_s = x_train[['sepal length (cm)', 'sepal width (cm)']]
x_train_p = x_train[['petal length (cm)', 'petal width (cm)']]
x_val_s = x_val[['sepal length (cm)', 'sepal width (cm)']]
x_val_p = x_val[['petal length (cm)', 'petal width (cm)']]

# Preprocess the data
n_class = len(np.unique(y_train))

from tensorflow.keras.utils import to_categorical

# One-hot encode the labels to match categorical_crossentropy
y_train = to_categorical(y_train, n_class)
y_val = to_categorical(y_val, n_class)

layers.Add

Adds the inputs element-wise.

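# Declare the layers
# Sepal branch
il_s = keras.layers.Input(shape=(2,))
h1_s = keras.layers.Dense(64, activation='relu')(il_s)

# Petal branch
il_p = keras.layers.Input(shape=(2,))
h1_p = keras.layers.Dense(64, activation='relu')(il_p)

# Merge the two branches by element-wise addition
add_l = keras.layers.Add()([h1_s, h1_p])
ol = keras.layers.Dense(n_class, activation='softmax')(add_l)

# Declare the model
model = keras.models.Model([il_s, il_p], ol)

# Compile the model
model.compile(loss=keras.losses.categorical_crossentropy,
              optimizer=keras.optimizers.Adam(),
              metrics=['accuracy'])

# Inspect the model (summary() prints by itself, so no print() is needed)
model.summary()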

'''
Model: "model"
__________________________________________________________________________________________________
Layer (type)                  Output Shape        Param #    Connected to
==================================================================================================
input_1 (InputLayer)          [(None, 2)]         0          []
input_2 (InputLayer)          [(None, 2)]         0          []
dense (Dense)                 (None, 64)          192        ['input_1[0][0]']
dense_1 (Dense)               (None, 64)          192        ['input_2[0][0]']
add (Add)                     (None, 64)          0          ['dense[0][0]',
                                                              'dense_1[0][0]']
dense_2 (Dense)               (None, 3)           195        ['add[0][0]']
==================================================================================================
Total params: 579
Trainable params: 579
Non-trainable params: 0
__________________________________________________________________________________________________
'''

As the figure shows, the Add layer has 64 outputs. The two 64-unit Dense outputs feeding it are added element-wise, so the result still has 64 units.

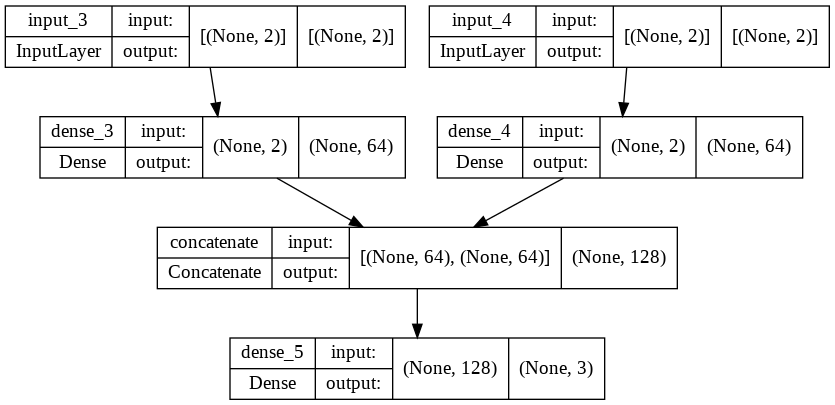
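To make the element-wise behavior concrete, here is a minimal NumPy sketch of the arithmetic. It is a stand-in for what `keras.layers.Add` computes, not a call into Keras itself; the two arrays are hypothetical activations playing the roles of `h1_s` and `h1_p`, shrunk from 64 units to 4 so the values are easy to read.

```python
import numpy as np

# Hypothetical activations standing in for h1_s and h1_p:
# a batch of 1 sample with 4 units each (the post uses 64 units).
h1_s = np.array([[1.0, 2.0, 3.0, 4.0]])
h1_p = np.array([[10.0, 20.0, 30.0, 40.0]])

# Element-wise sum: each unit is added to the unit at the same position.
added = h1_s + h1_p

print(added.tolist())  # [[11.0, 22.0, 33.0, 44.0]]
print(added.shape)     # (1, 4): the number of units is unchanged
```

Because the sum is taken position by position, both inputs must have the same shape, which is why both Dense layers use 64 units.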

layers.Concatenate

Concatenates the inputs.

# Declare the layers
# Sepal branch
il_s = keras.layers.Input(shape=(2,))
h1_s = keras.layers.Dense(64, activation='relu')(il_s)

# Petal branch
il_p = keras.layers.Input(shape=(2,))
h1_p = keras.layers.Dense(64, activation='relu')(il_p)

# Merge the two branches by joining them side by side
con_l = keras.layers.Concatenate()([h1_s, h1_p])
ol = keras.layers.Dense(n_class, activation='softmax')(con_l)

# Declare the model
model = keras.models.Model([il_s, il_p], ol)

# Compile the model
model.compile(loss=keras.losses.categorical_crossentropy,
              optimizer=keras.optimizers.Adam(),
              metrics=['accuracy'])

# Inspect the model
model.summary()

'''
Model: "model_1"
__________________________________________________________________________________________________
Layer (type)                  Output Shape        Param #    Connected to
==================================================================================================
input_3 (InputLayer)          [(None, 2)]         0          []
input_4 (InputLayer)          [(None, 2)]         0          []
dense_3 (Dense)               (None, 64)          192        ['input_3[0][0]']
dense_4 (Dense)               (None, 64)          192        ['input_4[0][0]']
concatenate (Concatenate)     (None, 128)         0          ['dense_3[0][0]',
                                                              'dense_4[0][0]']
dense_5 (Dense)               (None, 3)           387        ['concatenate[0][0]']
==================================================================================================
Total params: 771
Trainable params: 771
Non-trainable params: 0
__________________________________________________________________________________________________
'''

The Concatenate layer's output size equals the sum of the node counts of the layers feeding it (64 + 64 = 128).

Where Add sums the node values, Concatenate sums the node counts.

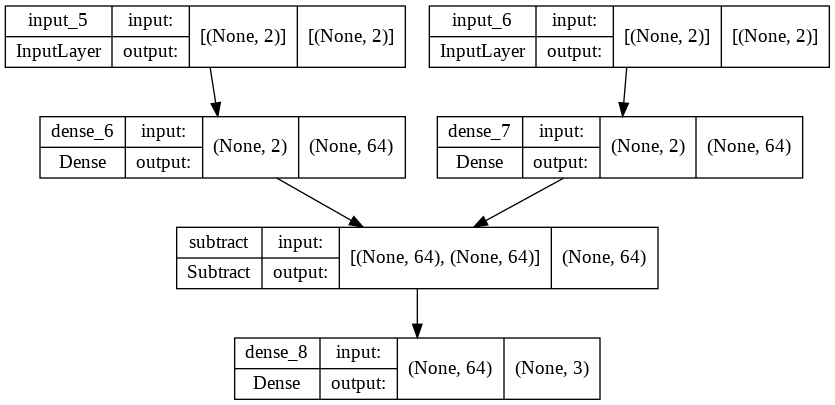
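The difference from Add, and its effect on the parameter counts in the summaries, can be checked with a short NumPy sketch. This is only an illustration of the arithmetic, assuming the default last-axis concatenation; the arrays are hypothetical 4-unit activations, and `dense_params` is a helper introduced here, not part of Keras.

```python
import numpy as np

# Hypothetical activations standing in for h1_s and h1_p (4 units instead of 64).
h1_s = np.array([[1.0, 2.0, 3.0, 4.0]])
h1_p = np.array([[10.0, 20.0, 30.0, 40.0]])

# Join along the last axis: values are kept as-is, the unit count grows.
merged = np.concatenate([h1_s, h1_p], axis=-1)
print(merged.shape)  # (1, 8)


def dense_params(n_inputs, n_units):
    """Parameters of a fully connected layer: one weight per input-unit pair plus one bias per unit."""
    return n_inputs * n_units + n_units


print(dense_params(2, 64))   # 192: each hidden Dense layer
print(dense_params(64, 3))   # 195: output layer after Add
print(dense_params(128, 3))  # 387: output layer after Concatenate
```

The wider merged tensor is why the output layer grows from 195 to 387 parameters and the model total rises from 579 to 771.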

layers.Subtract

Subtracts one input from the other element-wise.

# Declare the layers
# Sepal branch
il_s = keras.layers.Input(shape=(2,))
h1_s = keras.layers.Dense(64, activation='relu')(il_s)

# Petal branch
il_p = keras.layers.Input(shape=(2,))
h1_p = keras.layers.Dense(64, activation='relu')(il_p)

# Merge the two branches by element-wise subtraction (h1_s - h1_p)
sub_l = keras.layers.Subtract()([h1_s, h1_p])
ol = keras.layers.Dense(n_class, activation='softmax')(sub_l)

# Declare the model
model = keras.models.Model([il_s, il_p], ol)

# Compile the model
model.compile(loss=keras.losses.categorical_crossentropy,
              optimizer=keras.optimizers.Adam(),
              metrics=['accuracy'])

# Inspect the model
model.summary()

'''
Model: "model_2"
__________________________________________________________________________________________________
Layer (type)                  Output Shape        Param #    Connected to
==================================================================================================
input_5 (InputLayer)          [(None, 2)]         0          []
input_6 (InputLayer)          [(None, 2)]         0          []
dense_6 (Dense)               (None, 64)          192        ['input_5[0][0]']
dense_7 (Dense)               (None, 64)          192        ['input_6[0][0]']
subtract (Subtract)           (None, 64)          0          ['dense_6[0][0]',
                                                              'dense_7[0][0]']
dense_8 (Dense)               (None, 3)           195        ['subtract[0][0]']
==================================================================================================
Total params: 579
Trainable params: 579
Non-trainable params: 0
__________________________________________________________________________________________________
'''

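In contrast to Add, Subtract takes the difference of the node values: the second input is subtracted from the first, so the output still has 64 units.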

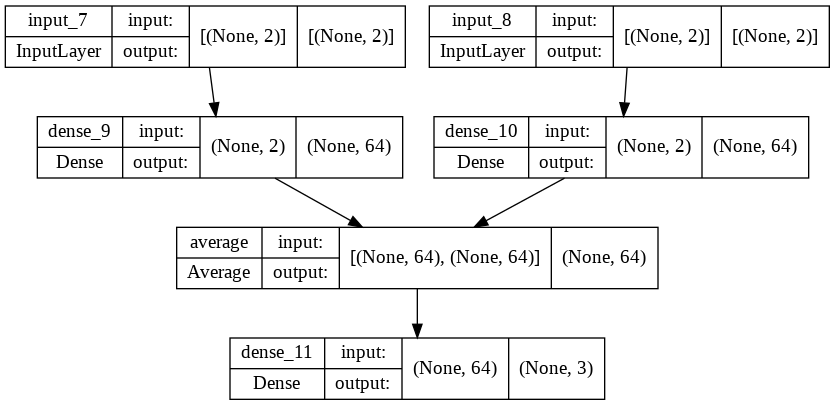
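Unlike addition, subtraction depends on the order of the inputs. The NumPy sketch below illustrates this with hypothetical 4-unit activations in place of `h1_s` and `h1_p`; it mirrors the first-minus-second arithmetic rather than calling Keras.

```python
import numpy as np

# Hypothetical activations standing in for h1_s and h1_p (4 units instead of 64).
h1_s = np.array([[1.0, 2.0, 3.0, 4.0]])
h1_p = np.array([[10.0, 20.0, 30.0, 40.0]])

# First input minus second input, position by position.
diff = h1_s - h1_p
# Swapping the order flips the sign of every element.
reverse = h1_p - h1_s

print(diff.tolist())     # [[-9.0, -18.0, -27.0, -36.0]]
print(reverse.tolist())  # [[9.0, 18.0, 27.0, 36.0]]
print(diff.shape)        # (1, 4): the number of units is unchanged
```

In the model above, this means `Subtract()([h1_s, h1_p])` and `Subtract()([h1_p, h1_s])` feed opposite-signed values to the output layer.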

layers.Average

Computes the element-wise average of the inputs' node values.

# Declare the layers
# Sepal branch
il_s = keras.layers.Input(shape=(2,))
h1_s = keras.layers.Dense(64, activation='relu')(il_s)

# Petal branch
il_p = keras.layers.Input(shape=(2,))
h1_p = keras.layers.Dense(64, activation='relu')(il_p)

# Merge the two branches by element-wise averaging
avg_l = keras.layers.Average()([h1_s, h1_p])
ol = keras.layers.Dense(n_class, activation='softmax')(avg_l)

# Declare the model
model = keras.models.Model([il_s, il_p], ol)

# Compile the model
model.compile(loss=keras.losses.categorical_crossentropy,
              optimizer=keras.optimizers.Adam(),
              metrics=['accuracy'])

# Inspect the model
model.summary()

'''
Model: "model_3"
__________________________________________________________________________________________________
Layer (type)                  Output Shape        Param #    Connected to
==================================================================================================
input_7 (InputLayer)          [(None, 2)]         0          []
input_8 (InputLayer)          [(None, 2)]         0          []
dense_9 (Dense)               (None, 64)          192        ['input_7[0][0]']
dense_10 (Dense)              (None, 64)          192        ['input_8[0][0]']
average (Average)             (None, 64)          0          ['dense_9[0][0]',
                                                              'dense_10[0][0]']
dense_11 (Dense)              (None, 3)           195        ['average[0][0]']
==================================================================================================
Total params: 579
Trainable params: 579
Non-trainable params: 0
__________________________________________________________________________________________________
'''
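As with Add and Subtract, the Average output in the summary keeps 64 units. A rough NumPy sketch of the arithmetic, using hypothetical 4-unit activations in place of `h1_s` and `h1_p`, might look like this; it illustrates the mean taken position by position and does not call Keras.

```python
import numpy as np

# Hypothetical activations standing in for h1_s and h1_p (4 units instead of 64).
h1_s = np.array([[1.0, 2.0, 3.0, 4.0]])
h1_p = np.array([[10.0, 20.0, 30.0, 40.0]])

# Element-wise mean of the two inputs.
averaged = (h1_s + h1_p) / 2

# Equivalent form that extends to more than two inputs:
# stack them on a new leading axis, then average over that axis.
averaged_stacked = np.mean(np.stack([h1_s, h1_p]), axis=0)

print(averaged.tolist())  # [[5.5, 11.0, 16.5, 22.0]]
print(averaged.shape)     # (1, 4): the number of units is unchanged
```

For two inputs the result is simply half of what Add would produce, so the merged values stay on the same scale as each branch.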
